Webex is continuously innovating on the hybrid work experience. Our team is leveraging artificial intelligence and deep-learning methods to deliver an exceptional collaboration experience: improving audio and video quality, translation, and transcription, and expanding automated assistance.

Our approach to artificial intelligence helps users feel seen, heard, and valued whether they participate from home, the office, or on the go. We’ve designed our AI platform to offer an inclusive approach to collaboration, enabling participation with people from around the world regardless of language, gender, or age. You can read about this approach in Cisco’s recently published Responsible AI framework, which specifies the governance, process, and educational measures we follow to reduce or eliminate biases that may emerge from algorithms or data sets.

Webex is uniquely positioned to offer an integrated and secure collaboration experience across our devices, desktops, laptops, smartphones, and emerging device formats. Our vision is to provide an experience that is as good as being there in person, or better. Our approach to AI delivers greater comprehension, reduces fatigue, and improves ease of use. We’re focused on using AI to make communication between participants clearer and to adapt to individual participant needs.

At Webex, we’ve architected our AI capabilities to treat collaboration as a comprehensive whole rather than as the typical silos of AI-related technology.

Designed for complex hybrid work environments

Our goal is to apply machine learning to audio, video, and natural language in a way that adapts to each end user and thrives in complex hybrid work environments.

Delivers an inclusive approach to collaboration

Our ML models are trained with large, diverse data sets to recognize a wide range of languages and accents in speech, and a wide range of demographics in computer vision.

Built with privacy and security at its core

Our technology architecture delivers a private and secure approach to machine learning computation by processing primarily on end users’ laptops and devices rather than transmitting media to the cloud. This edge-computing approach inherently improves security for media assets and data. Webex’s approach to AI development is based on Cisco’s Data Trust Principles.

A low-latency collaboration experience

The edge-centric approach also delivers a responsive experience, because media can be processed locally in tens of milliseconds, whereas round trips through the cloud introduce highly variable latency. Our algorithms are also designed to scale across different processor types, power budgets, and operating systems, ensuring a work-from-anywhere experience.
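As a rough illustration of why local processing can land in the tens of milliseconds, the arithmetic below estimates the latency of frame-based audio processing. It is a sketch under stated assumptions. The 10 ms frame (480 samples at 48 kHz), the one-frame lookahead, the 5 ms compute time, and the 80 ms cloud round trip are illustrative values, not measured Webex figures. The function names are hypothetical.

```python
def frame_latency_ms(frame_samples: int, lookahead_samples: int, sample_rate_hz: int) -> float:
    """Algorithmic latency of block-based processing: the model must buffer
    one full frame plus any lookahead before it can emit output."""
    return 1000.0 * (frame_samples + lookahead_samples) / sample_rate_hz


def total_latency_ms(algorithmic_ms: float, compute_ms: float, network_rtt_ms: float = 0.0) -> float:
    """Add per-frame compute time and, for cloud processing, a network round trip."""
    return algorithmic_ms + compute_ms + network_rtt_ms


# Illustrative (assumed) numbers: 10 ms frames at 48 kHz with one frame of lookahead.
algo = frame_latency_ms(frame_samples=480, lookahead_samples=480, sample_rate_hz=48_000)
edge = total_latency_ms(algo, compute_ms=5.0)                        # 25.0 ms
cloud = total_latency_ms(algo, compute_ms=5.0, network_rtt_ms=80.0)  # 105.0 ms
print(f"edge: {edge} ms, cloud: {cloud} ms")
```

In practice the network term is not a constant. Its variability (jitter) is the "highly variable latency" mentioned above, and the term disappears when processing stays on the device.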

Streamlines the collaboration experience on Webex devices

Webex AI technologies work in the background to produce a better overall experience and are directly integrated into Webex desk phones, desktops, and conference room devices.

Delivers opportunities for continuous innovation

Webex’s comprehensive and collaboration-focused AI architecture enriches the media experience and opens new opportunities to quickly adapt to new use cases and customer-specific domains.

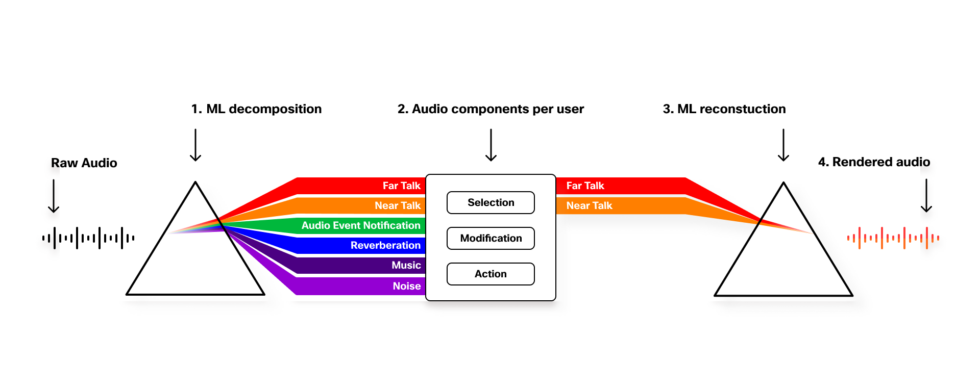

Webex’s AI-powered approach to media stream analysis and reconstruction

Webex customers rely on our portfolio to collaborate from anywhere. Our approach is to identify each participant’s characteristics regardless of their environment, then leverage AI and machine learning (ML) to separate incoming audio or video into segmented, data-rich streams. This level of detailed extraction of significant component streams has not previously been possible in widely deployed real-time communications software.

1. ML-powered decomposition

Decomposition separates an incoming audio stream into the following components:

- Foreground and background speakers are identified by estimating each speaker’s distance from the microphone based on the speech level and the reverberation of the speech.

- Audio events, including specific sound triggers or keywords, are detected.

- Reverberation, the subtle echoes of a voice in a room, is separated and can be adjusted to clarify the participant’s voice.

- Background music is separated into its own stream, enabling volume adjustments in the recomposition stage.

- Background noise is separated into its own stream. It can include ambient elements that may be adjusted depending on the use case.
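To make the decomposition concrete, here is a minimal sketch of how its output could be represented. It assumes a simple additive mixture model, in which the separated component streams sum back to the input. The ML separation step itself is not shown. The `DecomposedFrame` type, the component names, and the foreground thresholds are hypothetical and are not Webex's implementation. The foreground test uses level and direct-to-reverberant ratio (DRR) as stand-ins for the "speech level and reverberation" cues described above.

```python
from dataclasses import dataclass, field

# Hypothetical component names mirroring the list above.
COMPONENTS = ("foreground_speech", "background_speech", "reverb", "music", "noise")


@dataclass
class DecomposedFrame:
    """One audio frame split into named component streams (hypothetical type)."""
    components: dict[str, list[float]]
    events: list[str] = field(default_factory=list)  # e.g. detected keywords

    def mixture(self) -> list[float]:
        """Under the additive-mixture assumption, the components sum to the input."""
        length = len(next(iter(self.components.values())))
        return [sum(stream[i] for stream in self.components.values()) for i in range(length)]


def is_foreground(level_db: float, drr_db: float,
                  min_level_db: float = -30.0, min_drr_db: float = 0.0) -> bool:
    """Toy distance heuristic: a talker near the microphone tends to be louder
    and to have a higher direct-to-reverberant ratio. Thresholds are illustrative."""
    return level_db >= min_level_db and drr_db >= min_drr_db


frame = DecomposedFrame({
    "foreground_speech": [0.5, 0.0],
    "background_speech": [0.25, 0.0],
    "reverb": [0.125, 0.0],
    "music": [0.0625, 0.0],
    "noise": [0.0625, 1.0],
})
print(frame.mixture())  # [1.0, 1.0]
```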

2. Audio components per user

Once the data streams are separated, we aggregate them into audio components per user, enabling us to individually select, modify or take actions on each participant’s audio stream.
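The per-user aggregation step amounts to grouping separated streams by participant. The sketch below assumes each separated stream arrives tagged with a user ID and a component name. The tuple layout and both function names are hypothetical. `mute_component` shows one example of a per-participant action.

```python
from collections import defaultdict


def group_by_user(streams):
    """Group (user_id, component_name, samples) tuples into per-user dicts.

    Returns {user_id: {component_name: samples}}.
    """
    per_user = defaultdict(dict)
    for user_id, component, samples in streams:
        per_user[user_id][component] = samples
    return dict(per_user)


def mute_component(per_user, user_id, component):
    """Example per-participant action: silence one component for one user only."""
    samples = per_user[user_id][component]
    per_user[user_id][component] = [0.0] * len(samples)


grouped = group_by_user([
    ("alice", "foreground_speech", [0.1]),
    ("alice", "noise", [0.2]),
    ("bob", "foreground_speech", [0.3]),
])
mute_component(grouped, "alice", "noise")  # Bob's streams are untouched
```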

3. ML-powered reconstruction

Based on the use case, we can combine individual data streams back into the audio shared with others. This approach enables us to serve a variety of use cases and requirements. For example, the Webex smart audio feature lets participants choose to remove all background noise (Noise removal), remove all background noise and background speech (Optimize for my voice), or preserve the original sound when they play an instrument or sing (Music mode).
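One way to picture reconstruction is as a gain-weighted sum of the separated streams, with a different gain table for each mode. This is a minimal sketch under that assumption. The per-mode gain values (including suppressing music outside Music mode and halving reverb in "Optimize for my voice") are illustrative guesses, not Webex's actual settings. `MODE_GAINS` and `reconstruct` are hypothetical names.

```python
# Hypothetical per-mode gains for each component stream (assumed values).
MODE_GAINS = {
    "noise_removal": {
        "foreground_speech": 1.0, "background_speech": 1.0,
        "reverb": 1.0, "music": 0.0, "noise": 0.0,
    },
    "optimize_for_my_voice": {
        "foreground_speech": 1.0, "background_speech": 0.0,
        "reverb": 0.5, "music": 0.0, "noise": 0.0,
    },
    "music_mode": {
        "foreground_speech": 1.0, "background_speech": 1.0,
        "reverb": 1.0, "music": 1.0, "noise": 1.0,
    },
}


def reconstruct(components: dict[str, list[float]], mode: str) -> list[float]:
    """Recombine separated streams as a gain-weighted sum for the chosen mode.
    Components missing from the mode's gain table are dropped (gain 0)."""
    gains = MODE_GAINS[mode]
    length = len(next(iter(components.values())))
    out = [0.0] * length
    for name, samples in components.items():
        g = gains.get(name, 0.0)
        for i, s in enumerate(samples):
            out[i] += g * s
    return out


parts = {"foreground_speech": [0.5], "background_speech": [0.25],
         "reverb": [0.125], "music": [0.0625], "noise": [0.0625]}
print(reconstruct(parts, "noise_removal"))  # [0.875]
```

Music mode, with every gain at 1.0, returns the original mixture. The other modes simply zero out or scale down the streams the participant does not want others to hear.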

4. Rendered audio

The resulting audio stream is delivered to other participants in a way that is easier to comprehend with less mental effort.

Computational advantage enables new use cases

Because our AI architecture is focused on collaboration, we can separate the media in one computational cycle rather than processing the media stream multiple times through different models. This increases the overall efficiency of the process and delivers a low-latency experience. We can also easily add new components to the computational cycle, potentially enabling new usage scenarios with a richer picture of the input stream. The Webex media-stream processing approach extends to the following areas:

Voice recognition enhances comprehension

We can distinguish talkers from noise, distinguish talkers closer to the microphone from those farther away, and even adjust the room reverberation. All these elements are identified as separate streams, enabling greater flexibility to serve a specific user need. We can individually select, modify, and take actions on these streams and reconstruct new audio streams from selected audio components. For example, in one call we might want to equalize the volume of talkers in the foreground and background, and in another we may just want to highlight the speaker closest to the microphone. We can also recognize audio event triggers like “OK Webex” or highlight other environmental audio that may be important to a participant.
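The two per-call policies in the example above can be sketched as simple operations on per-talker streams. Equalizing volume is shown here as RMS normalization toward a target level. Highlighting the closest speaker is shown as attenuating ("ducking") everyone else. This is a minimal sketch, not the Webex algorithm. The target level, gain cap, duck gain, and the assumption that a distance estimate per talker is available are all hypothetical.

```python
import math


def rms(samples: list[float]) -> float:
    """Root-mean-square level of a block of samples (0.0 for an empty block)."""
    return math.sqrt(sum(s * s for s in samples) / len(samples)) if samples else 0.0


def equalize(talkers: dict[str, list[float]], target_rms: float = 0.1,
             max_gain: float = 10.0) -> dict[str, list[float]]:
    """Scale each talker's stream so its RMS approaches target_rms.
    The gain is capped so near-silent streams are not boosted into noise."""
    out = {}
    for tid, samples in talkers.items():
        level = rms(samples)
        gain = min(target_rms / level, max_gain) if level > 0 else 0.0
        out[tid] = [gain * s for s in samples]
    return out


def highlight_closest(talkers: dict[str, list[float]], distances: dict[str, float],
                      duck_gain: float = 0.25) -> dict[str, list[float]]:
    """Keep the talker with the smallest estimated distance at full level and
    attenuate all other talkers by duck_gain (hypothetical policy)."""
    closest = min(distances, key=distances.get)
    return {tid: [s * (1.0 if tid == closest else duck_gain) for s in samples]
            for tid, samples in talkers.items()}


talkers = {"near": [0.4, -0.4], "far": [0.05, -0.05]}
balanced = equalize(talkers)                                      # both end up near 0.1 RMS
focused = highlight_closest(talkers, {"near": 0.5, "far": 3.0})   # "far" is ducked
```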

Machine vision expands the power of video streams

Our media stream approach enables us to have a richer understanding of the video scene and to recompose elements to improve video quality. For example, we can distinguish a participant from their background and from the gestures they are using. We can render the video by selecting and modifying these separate streams to maximize how well other participants see the presenter, with minimum distractions. This approach opens a world of possibilities and makes it easier for participants to collaborate even from difficult environments.
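Recomposing a participant over a modified background is commonly done with alpha compositing, using a per-pixel foreground mask from a person-segmentation model. The sketch below assumes such a mask is already available (the segmentation model is not shown). It uses tiny grayscale frames as nested lists to stay dependency-free. The function names are hypothetical, and this is a generic technique rather than the Webex renderer.

```python
def composite(frame, mask, background):
    """Alpha-composite a participant over a replacement background.

    frame, background: 2-D lists of grayscale pixel values (0-255).
    mask: 2-D list of per-pixel foreground probabilities in [0, 1] (assumed input).
    Output pixel = mask * frame + (1 - mask) * background.
    """
    return [
        [m * f + (1.0 - m) * b for f, m, b in zip(frow, mrow, brow)]
        for frow, mrow, brow in zip(frame, mask, background)
    ]


def box_blur(image):
    """3x3 box blur with edge clamping, e.g. to build a blurred background."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                    for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            row.append(sum(vals) / 9.0)
        out.append(row)
    return out


frame = [[200, 200], [200, 200]]
mask = [[1.0, 0.0], [0.5, 1.0]]
replaced = composite(frame, mask, [[0, 0], [0, 0]])  # [[200.0, 0.0], [100.0, 200.0]]
```

Passing `box_blur(frame)` as the background gives background blur instead of background replacement. A soft (fractional) mask blends edge pixels so that hair and hand gestures do not produce hard cut-out outlines.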

Webex Assistant enhances the collaboration experience

Webex Assistant provides voice control of the collaboration experience, proactive intelligence, transcription, and translation services. We’ve implemented it so that more of the language recognition is processed on the device, increasing accuracy and reducing latency by up to 4x compared to standard cloud-based systems. Webex Assistant also offers APIs through Webex Assistant skills, so that third-party developers can add new functionality and connect their applications with voice controls. We’ve expanded the number of languages supported for transcription and translation, and added languages for Devices: German, French, Spanish, and Japanese now join the existing English support.

Machine vision opens 3D-powered frontiers

Computer vision enables the identification of the spatial environment in a video stream. The Webex approach to 3D focuses on reducing the cognitive load for presenters and participants, rather than requiring AR/VR headsets to embody them in an entirely virtual space. For example, we can extract accurate 3D models and adapt them. We can also scan participants’ facial geometry to enable image enhancement and personalization.

The Webex approach to AI enables teams to collaborate with increased flexibility and expands how people can participate in meetings. The robust AI-powered media stream processing model delivers a world-class collaboration experience today and opens new frontiers for tomorrow.

Want to experience the difference AI technology makes to collaboration? Contact us today for a demo.
