In this second post of the Real-Time Media Primer series, we’ll cover the basics of audio in real-time conferencing.

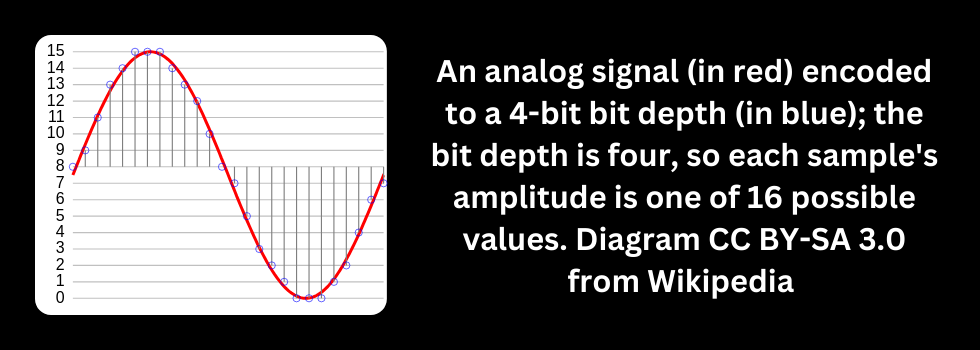

Audio is an analog phenomenon, but when recording it we need to translate it into a digital approximation. When doing so, there are two key values, the sampling rate and the bit depth. The sampling rate is how many times the analog waveform is sampled per second, while the bit depth is the number of bits available to represent the range of possible values.

The Nyquist-Shannon theorem, also called the sampling theorem, says that the sampling rate must be at least twice the highest frequency to be represented. Humans can generally hear up to ~22,000Hz, so CDs and other high-fidelity formats generally use a sampling rate of >44,000Hz. However, the frequency range of most human speech only extends up to ~3,000-4,000Hz, so audio codecs optimized only for voice can have sampling rates of just 6,000-8,000Hz. Audio codecs are often categorized as:

- Narrowband: Sampling for the most basic range of understandable human speech, often from ~300Hz to ~3,400Hz.

- Wideband: Sampling for the full range of human speech, from 50Hz to ~7,000Hz.

- Fullband: Sampling for music and any other audible sounds, from 50Hz to ~22,000Hz.
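
As a rough illustration of the sampling theorem applied to the categories above, the sketch below computes the minimum sampling rate implied by each band's upper frequency. The names `BAND_UPPER_HZ` and `min_sampling_rate_hz` are made up for this example; real codecs pick standard rates (such as 8, 16 or 48kHz) that sit at or above these minimums.

```python
# Upper frequency limits (Hz), taken from the categories listed above.
BAND_UPPER_HZ = {
    "narrowband": 3_400,
    "wideband": 7_000,
    "fullband": 22_000,
}


def min_sampling_rate_hz(max_frequency_hz: float) -> float:
    """Nyquist-Shannon: sample at least twice the highest frequency."""
    return 2 * max_frequency_hz


for band, upper_hz in BAND_UPPER_HZ.items():
    print(f"{band}: at least {min_sampling_rate_hz(upper_hz):,.0f} Hz")
```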

While the sampling rate defines the frequencies that a codec can encode, the bit depth is one of the key factors in how good it will sound: the more bits available to encode the height of the waveform at a particular point, the better the fidelity (closeness to the original analog waveform) the codec can have, though at the cost of larger payloads. Simpler codecs use 8 bits per sample, which sounds noticeably poor to most listeners. Audio CDs use 16 bits, which is ‘good enough’ for many listeners, but modern high-fidelity codecs may use 24 or even 32 bits per sample.
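
To make the trade-off between fidelity and payload size concrete, here is a minimal sketch that computes how many distinct amplitude values a given bit depth can represent, and the resulting bitrate of raw (uncompressed) audio. The function names are illustrative only, and the bitrate formula assumes plain PCM with no compression or packet overhead.

```python
def quantization_levels(bit_depth: int) -> int:
    """Number of distinct amplitude values a sample can take."""
    return 2 ** bit_depth


def raw_pcm_bitrate_bps(sample_rate_hz: int, bit_depth: int, channels: int = 1) -> int:
    """Bitrate of uncompressed audio: samples/second * bits/sample * channels."""
    return sample_rate_hz * bit_depth * channels


for depth in (8, 16, 24):
    print(f"{depth}-bit: {quantization_levels(depth):,} levels")

# 8 kHz, 8-bit mono voice versus 44.1 kHz, 16-bit stereo CD audio.
print(raw_pcm_bitrate_bps(8_000, 8))
print(raw_pcm_bitrate_bps(44_100, 16, channels=2))
```

The two sample calls give 64,000 bit/s for 8kHz 8-bit mono voice and 1,411,200 bit/s for CD-style stereo, which is why compression matters so much for anything beyond narrowband speech.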

Different codecs can use different methods of compression to achieve different bitrates for the same sampling rate and bit depth; as a rule, achieving a lower bandwidth for a given level of quality will require additional CPU.

One other choice for audio codecs is their payload size, or packetization interval: how many milliseconds of audio a given audio packet contains. A smaller payload size, with more packets per second, allows for lower latencies but decreases bandwidth efficiency, as more bits must be devoted to packet overhead per second. The most common payload size for audio codecs is 20ms, or 50 packets per second, though modern codecs may support multiple packet durations.
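
The overhead cost can be sketched with some simple arithmetic. The example below assumes 40 bytes of headers per packet (20 for IPv4, 8 for UDP, 12 for RTP) and ignores SRTP and link-layer framing; both that header size and the function names are assumptions made for illustration.

```python
# Assumed per-packet header cost: IPv4 (20) + UDP (8) + RTP (12) bytes.
# SRTP authentication tags and link-layer framing are deliberately ignored.
HEADER_BYTES = 20 + 8 + 12


def packets_per_second(ptime_ms: float) -> float:
    """Packets sent each second for a given packet duration in milliseconds."""
    return 1000 / ptime_ms


def wire_bitrate_bps(codec_bitrate_bps: float, ptime_ms: float,
                     header_bytes: int = HEADER_BYTES) -> float:
    """Codec bitrate plus the header overhead incurred every second."""
    overhead_bps = packets_per_second(ptime_ms) * header_bytes * 8
    return codec_bitrate_bps + overhead_bps


for ptime in (10, 20, 40, 60):
    total = wire_bitrate_bps(64_000, ptime)
    print(f"{ptime} ms packets: {total / 1000:.1f} kbit/s on the wire")
```

Under these assumptions a 64 kbit/s stream costs 80 kbit/s on the wire at 20ms per packet and 96 kbit/s at 10ms, so halving the packet duration doubles the overhead.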

6 Widely Supported Audio Codecs

Real-time voice-over-IP calling and conferencing has included, over the years, a bewildering array of different audio codecs. Different calling protocols and use cases tend to have their own set of codecs, which may or may not partially overlap with those of other use cases and protocols. Any implementor deciding which audio codecs to support is strongly advised to first survey the field they are targeting and identify the current set of best-supported codecs, particularly if they have, or may in the future have, any plans for interoperability.

Some key facts about some of the most widely supported audio codecs in video conferencing are listed below:

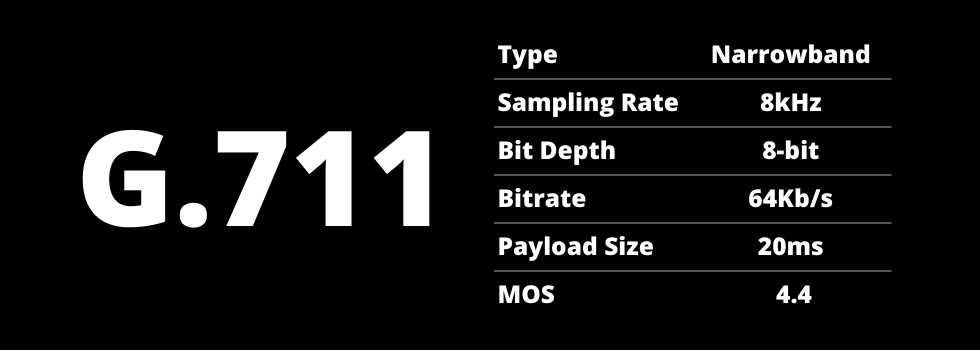

1) G.711

G.711 is one of the most basic audio codecs in existence and one of the best supported. Its quality is poor, referred to as toll quality, equivalent to a traditional PSTN long-distance line, and it requires 64 kbit/s for that quality as it applies no compression beyond simple companding. However, it is extremely simple to implement and has extremely low CPU requirements, making it very cheap to implement and deploy.

It is still widely used when gatewaying from PSTN to IP (as the intrinsic quality of PSTN means G.711 is often sufficient), and is supported in most other products, even modern ones, as it serves as a universal ‘lowest common denominator’ that, while not desirable, helps with interoperability. Even WebRTC (Web Real-Time Communication, used for audio and video calling in browsers) mandates G.711 support for this reason, even though that requirement was standardized as recently as 2016. Implementations are recommended to include G.711 support, as it is easy to implement and can be valuable for both interoperability and debugging.

Note that there are two different variants of G.711: μ-law (rendered in ASCII as u-law) and A-law. The two variants use slightly different methods of companding (an early form of compression and then expansion of the dynamic range), with μ-law supporting a slightly larger dynamic range but sounding slightly worse than A-law when handling low-volume signals. μ-law is most commonly used in North America and Japan, while A-law is more common in other regions. Implementations should support both.
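
The idea behind companding can be sketched with the continuous μ-law and A-law curves, using the conventional constants μ = 255 and A = 87.6. This is only an illustration of the shape of the curves: G.711 itself specifies a segmented, piecewise-linear 8-bit encoding that approximates them, so the code below is not a bit-exact G.711 encoder. Inputs are assumed to be normalized to the range -1.0 to 1.0, and the function names are illustrative.

```python
import math

MU = 255.0  # conventional mu-law constant
A = 87.6    # conventional A-law constant


def mu_law_compress(x: float) -> float:
    """Continuous mu-law curve for a sample x in [-1.0, 1.0]."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def mu_law_expand(y: float) -> float:
    """Inverse of mu_law_compress."""
    return math.copysign(((1 + MU) ** abs(y) - 1) / MU, y)


def a_law_compress(x: float) -> float:
    """Continuous A-law curve for a sample x in [-1.0, 1.0]."""
    ax = abs(x)
    if ax < 1 / A:
        y = A * ax / (1 + math.log(A))            # linear region for quiet samples
    else:
        y = (1 + math.log(A * ax)) / (1 + math.log(A))  # logarithmic region
    return math.copysign(y, x)


for sample in (0.01, 0.1, 0.5, 1.0):
    print(f"{sample:>5}: mu-law {mu_law_compress(sample):.3f}, "
          f"A-law {a_law_compress(sample):.3f}")
```

Both curves stretch quiet samples across more of the output range before quantization, which is how 8 bits per sample can cover a wider dynamic range than a linear 8-bit encoding.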

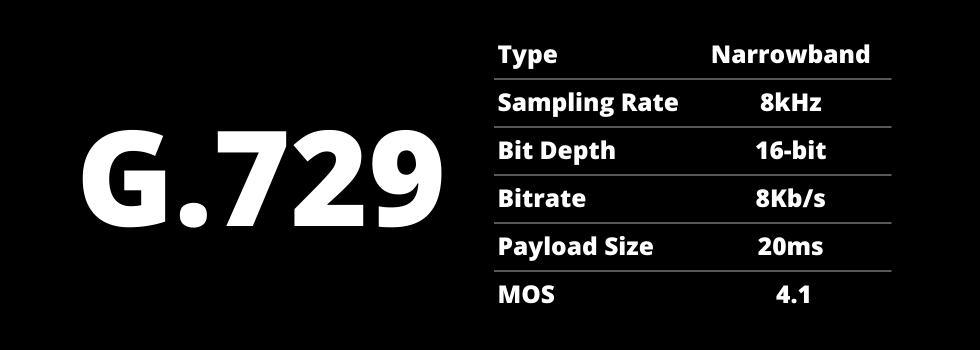

2) G.729

G.729 is an older codec that seeks to provide a similar quality level to G.711 but at a fraction of the bitrate. Although it has the same sampling rate as G.711 and a better bit depth, its lossy compression means that its quality is actually slightly worse than that of G.711, in exchange for a far lower bitrate. Modern codecs can achieve much higher levels of quality at similar bitrates, so G.729 is primarily used by older systems or those with very limited computational power.

The base G.729 codec has been extended with various features, primarily Annex A (less computational expense for a minor decrease in quality) and Annex B (silence suppression), so a G.729 stream can be G.729, G.729A, G.729B or G.729AB. However, while Annex A is compatible with the original implementation and hence does not need to be negotiated, Annex B is not – both sides must agree on whether to use it or not. This is included in the SDP (Session Description Protocol) negotiation for the codec; most modern implementations that offer G.729 support Annex B.
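
In SDP, Annex B is signalled with the `annexb` format parameter on the G.729 payload type (static payload type 18), and it is treated as `yes` when the parameter is omitted. The sketch below checks an offer and an answer for that parameter. The helper names and the sample SDP are made up for illustration, and the rule "use Annex B only if neither side says no" is a simplification of the full offer/answer procedure.

```python
def g729_annexb_enabled(sdp: str, payload_type: int = 18) -> bool:
    """Return False only if the fmtp line for the payload type says annexb=no."""
    prefix = f"a=fmtp:{payload_type} "
    for line in sdp.splitlines():
        line = line.strip()
        if not line.startswith(prefix):
            continue
        for param in line[len(prefix):].split(";"):
            name, _, value = param.strip().partition("=")
            if name.lower() == "annexb":
                return value.strip().lower() != "no"
    return True  # "yes" is implied when the parameter is omitted


def annexb_agreed(offer_sdp: str, answer_sdp: str) -> bool:
    """Simplified rule: use Annex B only if neither side has disabled it."""
    return g729_annexb_enabled(offer_sdp) and g729_annexb_enabled(answer_sdp)


# Hypothetical SDP fragments for illustration.
OFFER = """m=audio 49170 RTP/AVP 18
a=rtpmap:18 G729/8000
a=fmtp:18 annexb=no
"""
ANSWER = """m=audio 49172 RTP/AVP 18
a=rtpmap:18 G729/8000
"""

print(annexb_agreed(OFFER, ANSWER))
```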

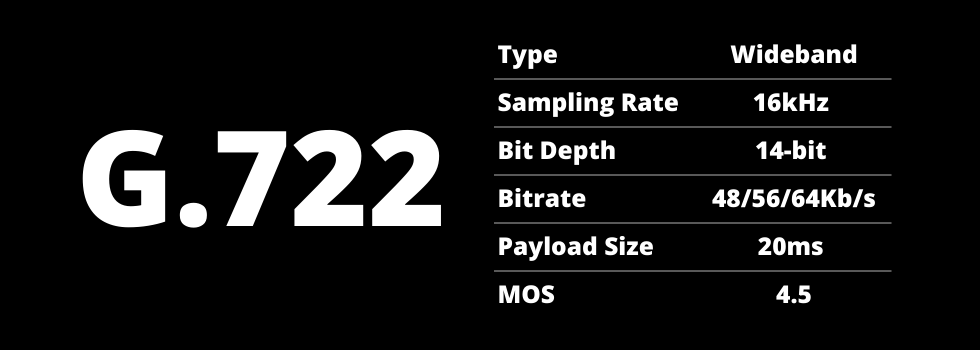

3) G.722

G.722 is an older wideband codec. Whereas G.729 sought to provide G.711-quality audio at much higher compression rates, G.722 instead aims to deliver much higher quality at a similar bitrate to G.711. G.722 is primarily used by older systems targeting a higher level of audio fidelity; because more modern codecs can provide higher audio quality at lower bandwidths, it is now primarily a concern for interoperability.

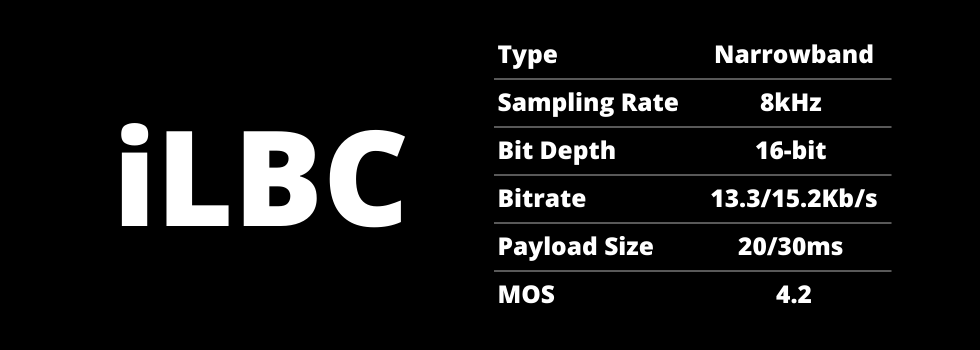

4) iLBC

iLBC, or internet Low Bitrate Codec, is a codec designed as a more modern replacement for G.729, providing notably higher quality with similar CPU requirements and only marginally higher bitrates. It was also designed with built-in handling of lost packets: as the name suggests, it was designed to operate over the public internet rather than internal corporate networks, which tend to be more reliable. However, while it has found support in web voice applications, it has limited adoption outside that domain.

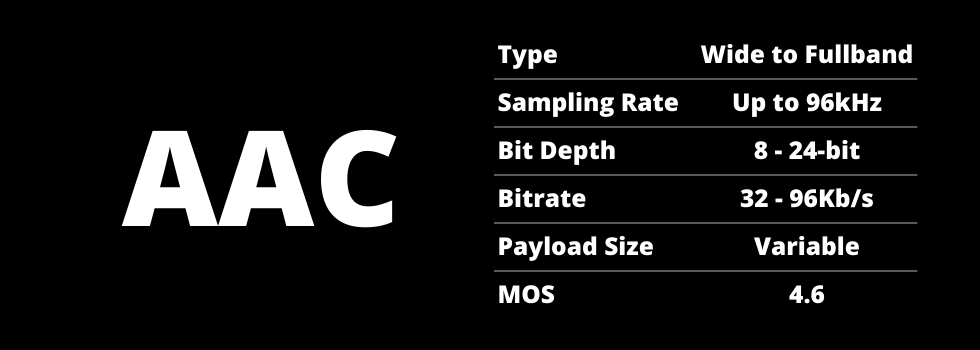

5) AAC

AAC, or Advanced Audio Coding, is a codec primarily designed for pre-recorded audio as a successor to MP3, and it is used by a huge variety of modern applications. AAC actually includes a wide variety of different toolsets and modes of operation, one of which is AAC-LD, where LD stands for Low Delay. AAC-LD is optimized for real-time usage, and this is the variant of AAC that is supported by many SIP and proprietary video conferencing systems as a modern wideband or fullband codec to give high-quality audio. Note that AAC is a highly complex format and is encumbered by patents, such that most video-conferencing software offering AAC-LD support does so via an implementation licensed from a separate entity such as Fraunhofer.

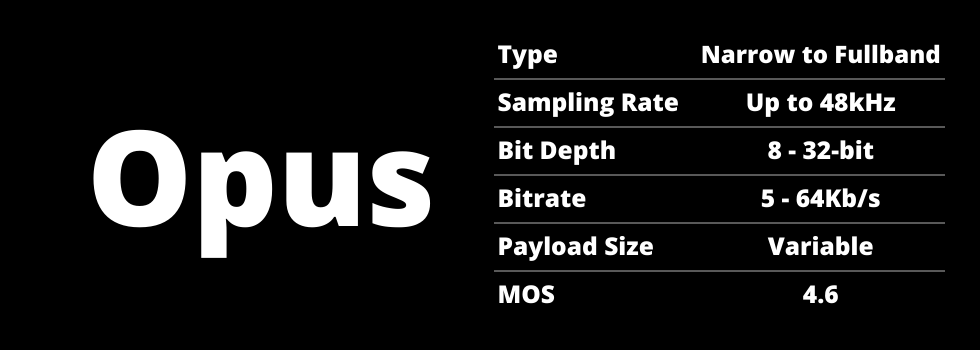

6) Opus

Opus is a modern audio codec designed as a ‘universal’ codec to address real-time use cases from very low bandwidth to high-fidelity fullband, and it is royalty-free and open-source. It also includes a number of tools that can be enabled during encoding, such as native Forward Error Correction (FEC) to protect against loss (see the later blog entries on Media Resilience) and a mode to dramatically reduce bandwidth during periods of silence.

Unlike most other codecs that support different bandwidths and other features, such as AAC-LD, usage of Opus does not involve negotiating the operating point or tools in use: instead, while the receiver can provide ‘hints’, the sender is free to use the operating point and tools of their choice, and the receiver is required to handle them. For that reason, Opus decoders must implement the entire toolset to be compliant. Implementors are highly recommended to use the open-source libopus library to both encode and decode Opus. Opus is used in WebRTC and increasingly widely in other modern real-time media conferencing solutions.
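
When Opus is signalled via SDP, the receiver's hints are carried as format parameters such as `maxplaybackrate`, `useinbandfec` and `usedtx`. The sketch below builds such a line; the helper name is illustrative, and payload type 111 is just an assumed dynamic payload type, not a fixed assignment.

```python
from typing import Mapping


def opus_fmtp_line(payload_type: int, hints: Mapping[str, int]) -> str:
    """Format receiver hints as an SDP fmtp attribute line."""
    params = ";".join(f"{name}={value}" for name, value in hints.items())
    return f"a=fmtp:{payload_type} {params}"


# Hypothetical receiver preferences: wideband playback, in-band FEC, and
# reduced transmission during silence.
receiver_hints = {"maxplaybackrate": 16000, "useinbandfec": 1, "usedtx": 1}

print("a=rtpmap:111 opus/48000/2")
print(opus_fmtp_line(111, receiver_hints))
```

These values only express what the receiver would prefer; the sender remains free to choose its own operating point, and the receiver must still be able to decode whatever arrives.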

Learn More: