This series focuses on SDP, the Session Description Protocol, the method by which almost all modern video-conferencing systems negotiate the contents of a call. We’ll start with an introduction and go into detail on various aspects of SDP and its usage in the next few blogs.

What is SDP?

When we place a call to another person, or to a server, the most visible part is the decision to make the call itself: to agree to communicate. But while the users are pressing the ‘make call’ or ‘answer call’ button, behind the scenes the clients and/or servers are conducting a much more detailed negotiation: how are we going to be communicating?

For example, are both sides going to send and receive audio, or is only one side going to send it? What codec are we going to use? How much bandwidth should we use? Where should we send the media packets? How are we going to encrypt the media? Establishing a call requires the two ends of the call to agree on these details and more.

While different protocols over the years have used a range of mechanisms for this negotiation, in the last decade or two one format has come to dominate: the Session Description Protocol, or SDP. SDP derives from a protocol developed in the early 1990s to send and receive media over the Mbone (Multicast Backbone), an experimental multicast network built on top of the internet, and was standardized in 1998. Today it is at the core of both the key standards-based protocols used for real-time media conferencing, SIP (Session Initiation Protocol) and WebRTC, and of proprietary systems such as Cisco Webex and Microsoft Teams.

SDP is sufficiently foundational that its specifications have been through several revisions. The current core specification for SDP itself is RFC8866, though as this was only published in 2021 most implementations are based on RFC4566 (the previous version). The other core specification is one that defines how SDP negotiation between two entities is performed, which is the basis of SDP’s usage in SIP, WebRTC, etc. This is currently defined in RFC3264, which defines the SDP Offer/Answer Model.

While SDP has accrued a huge number of extensions over the years, its underlying principles are very simple: it is a text-based protocol made up of a series of fields, one field on each line. A simple example of an SDP might look like this:

v=0

o=jdoe 3724394400 3724394405 IN IP4 198.51.100.1

s=-

b=AS:1920

c=IN IP4 198.51.100.1

t=0 0

m=audio 49170 RTP/AVP 114 101

a=rtpmap:114 opus/48000/2

a=fmtp:114 useinbandfec=1;stereo=1

a=rtpmap:101 telephone-event/8000

a=fmtp:101 0-15

Each field starts with a single ASCII character to identify its type, followed by the equals sign, followed by further text, the meaning of which is type-dependent. An SDP has a number of fields that describe the overall call (session in SDP terminology), followed by one or more “m=” fields. Each of these “m=” lines defines a separate media stream; all lines below that “m=” line pertain to that media stream until the next “m=” line is reached.

Per RFC8866, SDP uses Windows-style line endings with carriage returns as well as line feeds (\r\n), though implementations should accept UNIX-style line endings without issue.
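The structure described above can be sketched in a few lines of Python. This is a minimal illustration rather than a complete parser: the names `MediaBlock` and `split_sdp` are made up for this example, and it only separates session-level fields from media blocks without interpreting any values.

```python
from dataclasses import dataclass, field


@dataclass
class MediaBlock:
    m_line: str                                  # text after "m="
    fields: list = field(default_factory=list)   # (type, value) pairs under it


def split_sdp(text):
    """Split raw SDP text into session-level fields and media blocks."""
    session_fields = []
    media_blocks = []
    # splitlines() accepts both "\r\n" and "\n" line endings.
    for line in text.splitlines():
        if len(line) < 2 or line[1] != "=":
            continue  # blank or malformed line: skip it rather than reject
        field_type, value = line[0], line[2:]
        if field_type == "m":
            # Every "m=" line opens a new media block.
            media_blocks.append(MediaBlock(m_line=value))
        elif media_blocks:
            # Fields after an "m=" line belong to the most recent block.
            media_blocks[-1].fields.append((field_type, value))
        else:
            session_fields.append((field_type, value))
    return session_fields, media_blocks
```

Calling `split_sdp` on the example SDP above would give six session-level fields (v, o, s, b, c, t) and one media block holding the four “a=” lines.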

As SDP was originally designed to publicly advertise media sessions available on the Mbone it is not, as designed, entirely suited to a negotiation between two entities. RFC 3264 defines how to use it in such a negotiation, in a handshake referred to as “Offer/Answer”, in which one side sends an SDP Offer, which the other side receives, parses, and then sends back an Answer which is shaped as a response to the Offer and completes the exchange. This RFC mostly adds additional constraints when creating the SDP but also relaxes some of the requirements of the original spec. Offers and Answers are referenced at times in the below; what these are will be explained in more detail in a later blog.

While SDP defines 14 field types, real-time conferencing tends to only be concerned with half of those. SDP was created to cover more than just real-time calling and has a range of time-related fields that allow the scheduling of when media will be available, when it will recur, etc., that simply are not relevant to real-time conferencing. Of the fields used in conferencing, some are mostly vestigial, and in practice there are just four key fields: “a”, “b”, “c”, and “m”.

Note that, when processing an SDP, parsers should ignore any field type they do not recognize and move on to the next line. This is a general principle in SDP design — elements that are not recognized should be ignored rather than cause a rejection of the SDP.
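One way to get this behavior is a handler table keyed by field type, where anything without a handler is simply skipped. The sketch below is illustrative only: the handler names, the `state` dictionary, and the choice to record ignored lines are all assumptions made for this example.

```python
def handle_version(state, value):
    state["version"] = value


def handle_session_name(state, value):
    state["session_name"] = value


# Only the field types this hypothetical parser understands.
HANDLERS = {"v": handle_version, "s": handle_session_name}


def process_fields(lines):
    """Apply known handlers; remember, but otherwise ignore, everything else."""
    state = {"ignored": []}
    for line in lines:
        field_type, sep, value = line.partition("=")
        handler = HANDLERS.get(field_type) if sep else None
        if handler is None:
            # Unknown or malformed line: move on instead of rejecting the SDP.
            state["ignored"].append(line)
            continue
        handler(state, value)
    return state
```

Keeping the ignored lines around is optional, but it can make interoperability problems easier to debug later.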

Beyond these, there are some other fields that are mandatory for SDP and we will begin with those, as they are always (and in our cases only) present at the start of SDPs.

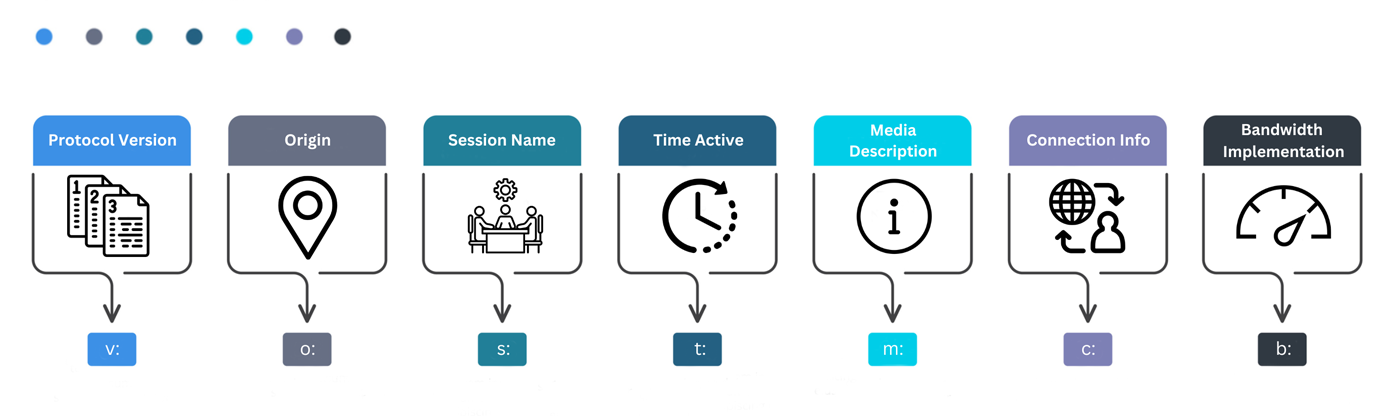

7 Field Types Essential for Video Conferencing

v: Protocol Version

In the decades since standardization, the protocol version is and remains 0, so this line is always v=0.

o: Origin

The Origin field is broken down into a set of space-separated subfields:

- Username: Despite the name this is most often consistent across endpoints of the same type and tends to be the device type, company name or some combination thereof. It can potentially be used for identification and interop, but be wary that middleboxes such as SIP B2BUAs (Back-to-Back User Agents) can and sometimes do rewrite this value, so its usefulness is limited.

- Id: Some kind of number sufficiently unique that the same system won’t ever resend it. When implementing you should just make sure that the same endpoint won’t reuse the value at a later date, even after a reboot. A UNIX timestamp, ideally with milliseconds appended, is one of the most common solutions. Per RFC3264 the value should fit within a signed 64-bit number.

- Version: A sequence number that must increment each time you send out the SDP during the call in any way different from the previous time it was sent. Some protocols (such as the SIP session timer, RFC4028) can lead to an SDP needing to be sent even when it has not changed, and in those cases the version can remain the same. When receiving an SDP, if the version number is the same you can assume that the media information is unchanged. However, you must still respond to it as normal (e.g., send an Answer if it is an Offer). Per RFC3264 the value should fit within a signed 64-bit number and the initial value should be less than (2^62)-1 to avoid rollovers.

- Network Type: Pretty much always ‘IN’, meaning internet.

- Address Type: ‘IP4’ means IPv4, ‘IP6’ means IPv6.

- Unicast Address: An address from the endpoint; can be a FQDN or an IP (the latter is used by the majority of implementations). Note that this is here primarily for uniqueness (rather like early versions of UUIDs) and should not be used for actual connectivity when processing; there are other fields for that. Note also that in certain circumstances using the actual address can be a privacy concern and leak information.

When writing an “o=” field you should ensure all of the elements above are included, as without them the SDP is technically invalid. However, when processing the SDP it is mostly only the version number that is of interest and even that is optional, as you can always choose to process an SDP if desired. When sending an SDP Answer to an SDP with an unchanged version number the Answer may also have an unincremented version number if the SDP is identical to the previous SDP sent, or it may be different.

Note that while the RFC mandates that a tuple of the elements of the origin field should be globally unique, implementations concerned with interoperability should not rely on this.
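As a rough sketch of the subfields above, the following Python parses and writes an “o=” field. The `Origin` class and the helper names are invented for this example, and starting the version at 1 is just one choice that satisfies the upper bound on the initial value.

```python
import time
from dataclasses import dataclass

MAX_INITIAL_VERSION = 2**62 - 1  # upper bound on the initial version


@dataclass
class Origin:
    username: str
    session_id: int
    version: int
    net_type: str
    addr_type: str
    address: str

    def to_line(self):
        return (f"o={self.username} {self.session_id} {self.version} "
                f"{self.net_type} {self.addr_type} {self.address}")


def parse_origin(value):
    """Parse the text after "o=" into its six space-separated subfields."""
    parts = value.split()
    if len(parts) != 6:
        raise ValueError("o= field needs six subfields")
    username, sess_id, version, net_type, addr_type, address = parts
    return Origin(username, int(sess_id), int(version),
                  net_type, addr_type, address)


def new_origin(username, address, addr_type="IP4"):
    """Create an origin whose id is a millisecond UNIX timestamp."""
    session_id = time.time_ns() // 1_000_000
    return Origin(username, session_id, 1, "IN", addr_type, address)


def bump_version(origin, sdp_changed):
    """Increment the version only when the SDP being sent has changed."""
    if sdp_changed:
        origin.version += 1
    return origin.version
```

A receiver would typically compare the parsed `version` against the last one seen from the far end to decide whether the media information needs reprocessing.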

s: Session Name

While the field is mandatory according to the specification and is meant to be a text string describing the session, it is mostly vestigial and should generally be “s=-”. However, in some cases, such as automated tests, it can be useful to populate this field. If you really wanted to fill the SDP with human-readable information there are also “i=” and “u=” fields defined in RFC8866, but these are very rarely used and could conceivably cause interoperability issues if used with third-party implementations.

t: Time Active

Originally designed to advertise multicast media sessions on the Mbone for anyone who wanted to receive them, SDP has multiple field types for defining precisely when the media is available, none of which is relevant to the video conferencing use-case. Unfortunately, the specification mandates that the SDP still contains at least one “t=” line; since it isn’t relevant, it should be set to “t=0 0” when writing and ignored when parsing. Technically a “t=” line creates a time description block that affects the fields below it, and so the “t=” line should be the last element you write in the SDP header before the first “m=” line (see the section below).

m: Media Description

Now we have come to the first of the key fields for negotiating a real-time media call. The media description lines, or “m=” lines (pronounced ‘m lines’) for short, each mark a section of the SDP defining a type of media to be sent and/or received within the call. These are most often things like main audio, main video, content video, etc, but “m=” lines can also define data protocols sent over the media path such as BFCP or SCTP.

An important property of “m=” lines is that they define a media description block; all subsequent fields in the SDP up to the next “m=” line apply to that media description, allowing bandwidth limits, codecs etc to be defined for that media type. Fields from before the first “m=” line are called session attributes, as they apply to the entire session (call).

The “m=” line contains a number of space-separated subfields:

- Media Type: a string defining the media type of this “m=” line. “audio” and “video” are the most common, but there is also “application” which is used for data protocols such as BFCP, along with a few other potential values not generally used in video conferencing applications.

- Port: The RTP port to which the far end should send the media. This is an illustration of the fact that most fields in SDP define receive properties, though there are exceptions that can trip people up. By default, this also defines the RTCP port to be one higher than the RTP port, with RTP ports being even-numbered and RTCP ports being odd-numbered. If you want to use an odd-numbered RTP port or want a non-contiguous RTCP port (or a separate address) there is an “a=rtcp” attribute to do that (see later). The specification also allows for defining multiple receive ports for media streams with hierarchical encodings, though you should not count on anyone supporting this.

- Transport Protocol: The protocol of the media to be sent/received. While seemingly straightforward, with “RTP/AVP” defining base RTP, this is actually one of the more finicky and problematic aspects of SDP when it comes to wide interoperability. The issue is that both encryption and RTCP-feedback have defined extensions to the transport protocol string that are not backward-compatible. How best to handle this will be covered in more detail in the section on implementing ‘best-effort’ encryption, but for now be aware that “RTP/AVP”, “RTP/SAVP”, “RTP/AVPF” and “RTP/SAVPF” are all possibilities. If you receive the far end’s SDP first the best bet for interoperability is usually to mirror their transport protocol in your SDP Answer and in all further SDPs you send. Note that if the media type is “application” the transport protocol will be something else, such as “UDP/DTLS/SCTP”.

- Media Format: Assuming that this is an audio or video “m=” line this next subfield is a string of space-separated numbers representing the payload types of the codecs supported in this “m=” line in order of preference, with the most preferred codec first in the list. Payload types are values between 0 and 127 that are included in the RTP headers of packets and can be used to map from the packet to its codec type (and hence how it should be decoded).

Note that although the codec list is in order of preference, the far end can choose to send any codec included in this list that they also included in the Offer/Answer exchange, and can switch between them at any point mid-call. Some old SIP endpoints and middleboxes used to attempt to simplify matters by ‘locking down’ the codec choice to a single codec per “m=” line by only including a single codec in SDP Answers, though this behavior is not seen much these days.

Older codecs were assigned a static payload type in the 0-34 range. The IETF quickly realized this was not scalable, and newer codecs instead use a dynamic payload type in the range 96-127. Initial SDP Offers can pick their own dynamic payload types so long as they do not subsequently change them, but see the Offer/Answer section for best practices when it comes to picking them when sending an initial SDP Answer.

For non-RTP “m=” lines the format will be defined by the spec of the protocol in question; * is common if there is nothing relevant.
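To make the subfields concrete, here is a small Python sketch that parses the text after “m=”. The names `MediaLine`, `parse_media_line`, `rtp_payload_types` and `payload_kind` are hypothetical, and treating any transport string containing “RTP/” as RTP-based is a simplifying assumption for this example, not a rule from the specification.

```python
from dataclasses import dataclass


@dataclass
class MediaLine:
    media_type: str   # "audio", "video", "application", ...
    port: int
    transport: str    # e.g. "RTP/AVP", "RTP/SAVPF", "UDP/DTLS/SCTP"
    formats: list     # raw format tokens, most preferred first


def parse_media_line(value):
    """Parse the text after "m=" into its space-separated subfields."""
    parts = value.split()
    if len(parts) < 4:
        raise ValueError("m= line needs type, port, transport and a format")
    media_type, port_field, transport = parts[:3]
    # The spec's multi-port form is "port/count"; only the base port is kept.
    port = int(port_field.split("/")[0])
    return MediaLine(media_type, port, transport, parts[3:])


def rtp_payload_types(media):
    """Return payload types as integers, or [] for non-RTP transports."""
    if "RTP/" not in media.transport:   # assumption: simple heuristic
        return []
    return [int(token) for token in media.formats]


def payload_kind(payload_type):
    """Classify a payload type using the ranges given in the text."""
    if 0 <= payload_type <= 34:
        return "static"
    if 96 <= payload_type <= 127:
        return "dynamic"
    return "other"
```

Keeping `formats` as raw strings means a non-RTP line with a format such as `*` still parses cleanly.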

One odd-seeming requirement of the SDP spec is that, once an “m=” line is included in an SDP it can never be removed or re-ordered. For example, if Alice sends an SDP Offer containing two “m=” lines, one for audio and one for video to Bob, who only supports audio, Bob’s Answering SDP must still include two “m=” lines to match Alice’s, with the video “m=” line disabled. Subsequent SDP Offers sent by Bob must also still include these two “m=” lines for the remainder of the call.

An “m=” line is disabled if the port value is set to 0. Once that is done the later subfields don’t really matter but as a matter of validity a valid transport protocol should be used and at least one payload type value should be included for the media format. There should not be any other field types under the “m=” line; the next line in the SDP should be another “m=” line or the end of the SDP.
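The Alice and Bob example can be sketched as follows. This is a simplified illustration under several assumptions: the function name and its parameters are invented here, there is at most one “m=” line per media type, the Offer is well-formed, and codec selection is skipped entirely (a real implementation would intersect the offered formats with its own).

```python
def build_answer_m_lines(offer_m_lines, supported_types, local_ports):
    """Return one answer "m=" line per offered line, in the same order.

    offer_m_lines:   text after "m=" for each line in the Offer
    supported_types: media types this endpoint can handle, e.g. {"audio"}
    local_ports:     media type -> local receive port
    """
    answer = []
    for value in offer_m_lines:
        media_type, _offered_port, transport, *formats = value.split()
        if media_type in supported_types:
            port = local_ports[media_type]
            chosen = formats
        else:
            # Disabled: port 0, but still a valid transport and one format.
            port = 0
            chosen = formats[:1]
        # Mirror the offered transport protocol string.
        answer.append(f"m={media_type} {port} {transport} {' '.join(chosen)}")
    return answer
```

With an Offer of audio plus video and an audio-only endpoint, the result keeps both lines and sets the video port to 0.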

c: Connection Information

While the “o=” field does include an address this is only used for uniqueness and should never be used for connectivity. Instead, there is a specific “c=” field for defining this. The format is actually the same as the last three subfields of the “o=” field:

- Network Type: Pretty much always ‘IN’, meaning internet.

- Address Type: ‘IP4’ means IPv4, ‘IP6’ means IPv6.

- Unicast Address: The address to which media should be sent. While the specification technically allows for this to be an FQDN this is in practice pretty much always an IP address. As should be expected if the address type is ‘IP4’ this should be an IPv4 address, if ‘IP6’ an IPv6 address.

Depending on where they are in the SDP “c=” lines are either session level or media level. A “c=” line that appears prior to the first “m=” line is a session-level field and applies to all “m=” lines in the SDP, while one that appears within a media description block applies only to that block. It is valid to have both, in which case the media-level field overrides the session-level value. While most endpoints will only ever set a session-level field SDP parsing implementations should generally have an address per media block and use the session-level field to populate that if there is no media-level field. An SDP must have either a session-level “c=” field or a media-level one in each media block to be valid.
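The override rule can be sketched like this in Python. The function names and the use of plain `(type, value)` tuples are assumptions for this example; no address validation is attempted.

```python
def parse_connection(value):
    """Parse the text after "c=" into its three subfields."""
    net_type, addr_type, address = value.split()
    return {"net_type": net_type, "addr_type": addr_type, "address": address}


def first_connection(fields):
    """Return the first "c=" value in a list of (type, value) pairs."""
    return next((value for ftype, value in fields if ftype == "c"), None)


def effective_connections(session_fields, media_blocks):
    """Resolve one connection per media block.

    session_fields: (type, value) pairs from before the first "m=" line
    media_blocks:   one list of (type, value) pairs per "m=" line
    """
    session_c = first_connection(session_fields)
    resolved = []
    for fields in media_blocks:
        media_c = first_connection(fields)
        # A media-level "c=" overrides the session-level one.
        chosen = media_c if media_c is not None else session_c
        if chosen is None:
            raise ValueError("no c= line at session or media level")
        resolved.append(parse_connection(chosen))
    return resolved
```

Storing the resolved address on each media block means the rest of the code never needs to care at which level the “c=” line appeared.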

b: Bandwidth Information

The bandwidth field defines the maximum bandwidth that the sender of the SDP is willing to receive. As with “c=” lines this can be a session-level or media-level field, but unlike “c=” lines the two levels have somewhat different semantics, which means it is not uncommon to have both session-level and media-level “b=” lines in the same SDP. A session-level bandwidth field gives the maximum bitrate of the entire call; the sum of all media streams sent should not exceed this bandwidth. A media-level “b=” line defines the maximum bandwidth of that specific media stream.

As such, an SDP that allows for both main and content video might have a session-level limit of 4M for the call overall but maximum bandwidths of 2.5M for each of the main and content video streams. An endpoint sending only main video could do so at up to 2.5M. When it also wished to send content video, it could send up to 2.5M for either stream, but the two would need to sum to no more than 4M; it might send 1.5M main video and 2.5M content video, or 2M for both, or some other permutation.

Because of this, implementations need to store both the per-media stream bandwidth limit and the overall call limit. If no per-stream limit is set, then it is effectively equal to the overall call limit. And if no call limit is set, then it is effectively equal to the sum of the per-media stream bandwidths. Note that it is best practice to always set a session level bandwidth limit (there are SIP middleboxes that may add one if it is not present, and may cause problems when doing so), but it is not uncommon to not have limits set for individual “m=” lines other than video.
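The interaction between the two levels can be sketched as below. The function names are made up for this example, all values are assumed to be in kilobits per second (so “4M” becomes 4000), and `None` stands for “no limit given”.

```python
def effective_limits(session_kbps, media_kbps):
    """Fill in missing limits from the other level.

    session_kbps: session-level limit, or None if absent
    media_kbps:   per-stream limits, None where a stream has none
    """
    if session_kbps is None:
        if media_kbps and all(m is not None for m in media_kbps):
            # No call limit: effectively the sum of the stream limits.
            session_kbps = sum(media_kbps)
    # No stream limit: effectively the call limit (may still be None).
    per_stream = [m if m is not None else session_kbps for m in media_kbps]
    return session_kbps, per_stream


def allocation_ok(session_kbps, media_kbps, proposed_kbps):
    """Check proposed send rates against both levels of limit."""
    session, per_stream = effective_limits(session_kbps, media_kbps)
    for limit, rate in zip(per_stream, proposed_kbps):
        if limit is not None and rate > limit:
            return False
    return session is None or sum(proposed_kbps) <= session
```

With a 4000 session limit and two 2500 stream limits, sending 1500 and 2500 passes the check, while sending 2500 on both streams does not because the total exceeds the call limit.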

“b=” lines are formatted as follows:

b=bwtype:bandwidth

Note that there is not meant to be a space before or after the colon, though a parser should be tolerant of whitespace where it is not meant to be.

There are three potential values for the bandwidth type:

- AS (Application Specific): This is the maximum total bandwidth of the session or media stream in kilobits per second, inclusive of overheads such as IP packet headers, etc.

- CT (Conference Total): Rarely used in real-time media negotiation. Most implementations simply treat this as equivalent to AS when parsing, expressing the maximum bandwidth in kilobits per second. It does have some different semantics when multiplexing multiple media streams onto a single port; make sure to read RFC8859 carefully if implementing support for such multiplexing.

- TIAS (Transport Independent Application Specific): The bandwidth of the session or media stream in bits (not kilobits) per second, excluding non-media overheads for the transport such as IP packet headers. Not part of the core SDP spec, TIAS is defined as an extension in RFC3890 but is quite widely supported. That RFC also defines how to calculate the transport overhead and hence the actual bandwidth, but in practice most implementations ignore this and just treat TIAS values as equivalent either to 1024*AS or to a multiplier with some minor extra ‘fudge factor’ for the overhead.

In practice when interoperating with third-party endpoints it is generally safest to use AS in SDP Offers, and either use AS in Answers or use the same type as the far end’s Offer. It is also safest to assume that the far end will treat AS and CT as equivalent and simply scale TIAS up by ~1024 to match.
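A tolerant “b=” parser along these lines might look like the sketch below. The function name and the `tias_divisor` parameter are assumptions for this example: the default of 1000 reflects kilobits strictly meaning 1000 bits, while passing 1024 would match the scaling described above. Transport overhead is ignored, as the text says most implementations do.

```python
def parse_bandwidth(value, tias_divisor=1000):
    """Parse the text after "b=" and normalise it to kilobits per second.

    Returns (bwtype, kbps); kbps is None for bandwidth types this sketch
    does not understand, so the caller can ignore the line.
    """
    bwtype, sep, amount = value.partition(":")
    if not sep:
        raise ValueError("b= line needs a colon")
    # Be tolerant of stray whitespace around the colon.
    bwtype = bwtype.strip()
    number = int(amount.strip())
    if bwtype in ("AS", "CT"):
        return bwtype, number            # already in kilobits per second
    if bwtype == "TIAS":
        return bwtype, number // tias_divisor   # bits per second -> kbps
    return bwtype, None
```

For the example SDP, `parse_bandwidth("AS:1920")` yields a limit of 1920 kilobits per second.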
